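Because of the limits of my technical ability, I sometimes run into problems that seem very strange and leave me baffled. In the end, though, the root cause usually turns out to be my own understanding: my skills were not good enough, I did not think the problem through, or I simply made a basic misjudgment. Some of these problems stay unsolved even after I ask others and search for information, so all I can do is write them down as notes and wait to solve them later. As time passes, some of them get forgotten and some get solved at a later point. This post records these problems, and I will keep it updated whenever I hit a new problem or solve an old one.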

Common links

First, here are some frequently used website links for quick reference:

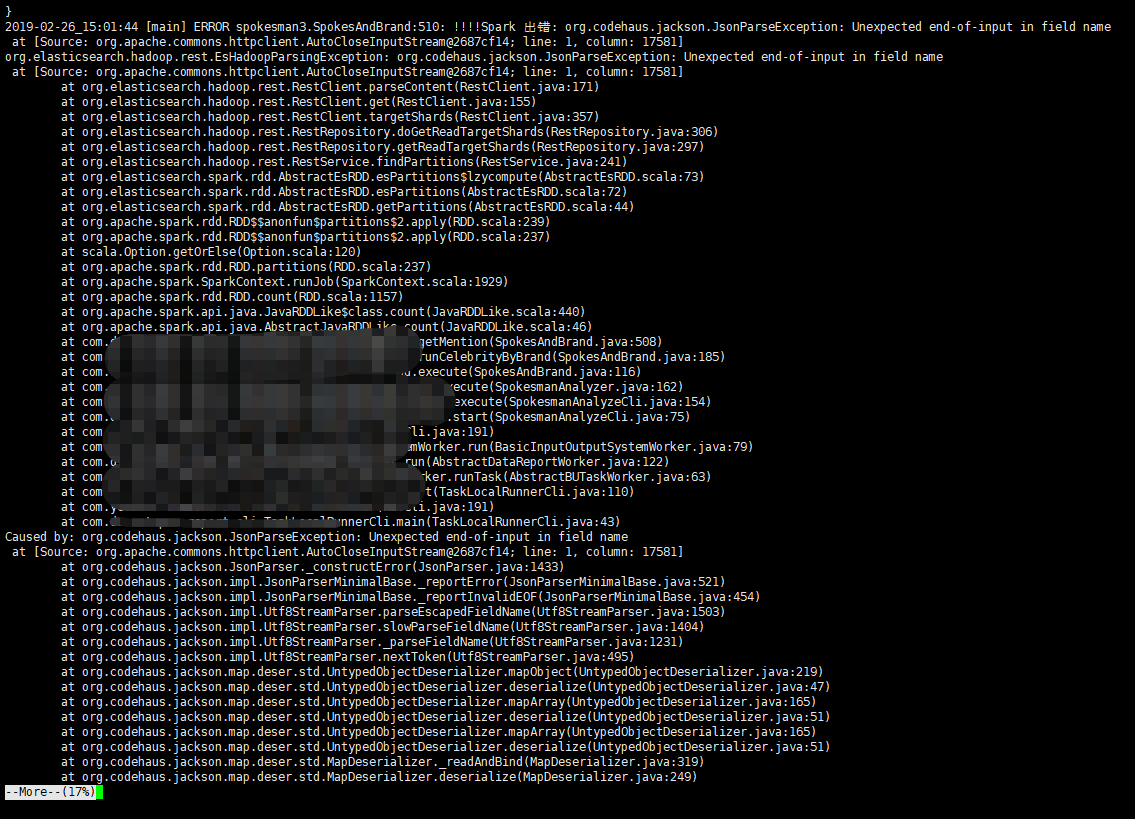

es-spark: count fails after reading data from es

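I used the es-hadoop component to start a Spark job that queries es data, filters it, and then runs a count operator on the filtered result, and the job failed with an error. After the failure I reran it many times (more than 5), and it always succeeded, so I could not reproduce the problem. This job runs regularly and I had never seen this exception before. My initial suspicion is that the es cluster was unstable, but the exact cause is unknown.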

Error screenshot:

The full error message is as follows (important package names have been replaced):

2019-02-26_15:01:44 [main] ERROR spokesman3.SpokesAndBrand:510: !!!!Spark error: org.codehaus.jackson.JsonParseException: Unexpected end-of-input in field name
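I have not confirmed the root cause, but the message itself gives a hint: the JSON parser reached the end of its input in the middle of a field name, which suggests the response it was parsing had been cut off. That fits both the guess of an unstable cluster and the fact that reruns succeeded. Below is a minimal sketch in Python with the standard `json` module (not Jackson or es-hadoop) of retrying only on this kind of parse error. The `retry` helper and the fake responses are hypothetical, made up for illustration.

```python
import json
import time


def retry(fn, attempts=3, delay_seconds=0.0, retry_on=(json.JSONDecodeError,)):
    """Call fn() up to `attempts` times, retrying only on the given exception types."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on as err:
            last_error = err
            if attempt < attempts:
                time.sleep(delay_seconds)  # wait before the next try
    raise last_error


# Hypothetical responses: the first one is cut off in the middle of a field name,
# mimicking a truncated body returned by an unstable cluster.
responses = iter(['{"hits": {"tot', '{"hits": {"total": 42}}'])


def fetch_count():
    return json.loads(next(responses))["hits"]["total"]


print(retry(fetch_count))  # 42
```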

Hexo-generated static HTML pages: table-of-contents anchors do not work

All of my blog posts are first written as Markdown files, then rendered into static HTML pages with Hexo and published to GitHub Pages; some are also published on my own VPS (for the Baidu crawler).

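Recently, however, I noticed that the anchors in some posts do not work, which shows up as a table of contents that cannot jump. For example, if I want to go straight to a particular section and click its heading in the Table of Contents on the right, the page does not scroll there. I noticed this a long time ago but never paid attention to it and never found the cause. Only recently did I happen to discover that it is caused by spaces in the heading text, which break the anchors in the generated static HTML pages. I then tested a few other pages at random, and this does seem to be the case. Some examples: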

https://www.playpi.org/2019022501.html, in the Hexo pitfalls notes

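However, I also found that headings on other people's blogs contain spaces too, yet their anchors jump correctly, which puzzles me. For now I suspect it is a Hexo problem or something that needs configuring; I will wait until I find a solution. An example from someone else's blog: https://blog.itnote.me/Hexo/hexo-chinese-english-space/ .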
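I still do not know what Hexo does here, so the following is only a hypothetical model of how a space could break an anchor: a browser jumps to an in-page link only when the link's fragment, after percent-decoding, matches the id of an element on the page. If the TOC link and the heading id are built with different rules (for example one keeps the space and the other replaces it with `-`), the click silently does nothing. `naive_slug` is an invented rule, not Hexo's actual one.

```python
from urllib.parse import quote, unquote


def naive_slug(heading):
    """Hypothetical slug rule: trim the heading and replace spaces with '-'."""
    return heading.strip().replace(" ", "-")


def toc_href(heading, slug_fn):
    """Build a TOC link to the heading; spaces and non-ASCII get percent-encoded."""
    return "#" + quote(slug_fn(heading))


def anchor_matches(href, element_id):
    """An in-page jump works only if the decoded fragment equals the element id."""
    return unquote(href[1:]) == element_id


heading = "Hexo pitfalls"
heading_id = naive_slug(heading)  # id written on the heading element: "Hexo-pitfalls"

# Same rule on both sides: the link works.
print(anchor_matches(toc_href(heading, naive_slug), heading_id))   # True
# TOC keeps the raw space ("#Hexo%20pitfalls"): the link silently fails.
print(anchor_matches(toc_href(heading, lambda h: h), heading_id))  # False
```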

A strange exception caused by the email dependency

I added an email-related dependency to the project [nothing else changed] so that it could send notification emails when needed. The dependency is:

<!-- Email-related dependencies -->

Then something strange happened: at runtime the program threw an exception [removing the dependency made it run normally]:

Exception in thread "main" java.lang.StackOverflowError

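Searching for this exception turned up nothing useful, and I could not solve it for a long time. Finally, after comparing the configuration with other projects, I found that manually setting the version of the maven-assembly-plugin plugin to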

Python beginner pitfalls

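When I first started using Python, I did not use a distribution such as Anaconda or WinPython to manage third-party libraries automatically. Instead, I installed Python itself and then used pip to install each library as I needed it. The whole process can truly drive you crazy: transitive dependencies between libraries, version incompatibilities and similar problems are daunting. Here are some memorable experiences.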

Error encountered:

Install packages failed: Installing packages: error occurred

You need to install numpy+mkl manually first, then install scipy manually. Download link: http://www.lfd.uci.edu/~gohlke/pythonlibs . I downloaded 2 files, numpy-1.11.3+mkl-cp27-cp27m-win32.whl and scipy-0.19.0-cp27-cp27m-win32.whl, and installed them manually.

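At first I downloaded the 64-bit packages, but it turned out the Python installed on my Windows machine was 32-bit, so they were not supported [I did not choose the bitness when downloading and just took the default package]. Note that the version information is also printed when you enter the Python interactive shell. Running import pip; print(pip.pep425tags.get_supported()) lists the file-name tags and versions that pip supports.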
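One caveat: `pip.pep425tags` was an internal pip module and is no longer importable in newer pip releases (the separate `packaging` library offers `packaging.tags.sys_tags()` for the same purpose). Below is a stdlib-only sketch that checks the interpreter's bitness and reads the tags out of a wheel file name. The helper names are mine, and the Windows check only handles the plain `win32` / `win_amd64` platform tags, so treat it as a rough aid rather than a full compatibility check.

```python
import struct
import sys


def interpreter_bits():
    """Pointer size of the running interpreter: 32 or 64."""
    return struct.calcsize("P") * 8


def wheel_tags(filename):
    """Split a wheel file name into (python tag, abi tag, platform tag).

    Wheel names follow name-version[-build]-python-abi-platform.whl,
    so the last three dash-separated fields are the tags.
    """
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    return tuple(filename[: -len(".whl")].split("-")[-3:])


def windows_wheel_fits(filename, bits):
    """Rough check: 'win32' wheels need 32-bit Python, 'win_amd64' needs 64-bit."""
    expected = {32: "win32", 64: "win_amd64"}[bits]
    return wheel_tags(filename)[2] == expected


print(interpreter_bits(), sys.version)
print(wheel_tags("numpy-1.11.3+mkl-cp27-cp27m-win32.whl"))  # ('cp27', 'cp27m', 'win32')
```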

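Note that scipy needs various third-party libraries installed first; the official note says: Install numpy+mkl before installing scipy. To verify in the shell that a third-party library was installed successfully, for example numpy, run: from numpy import *.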
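`from numpy import *` works as a quick check but pulls every name into the shell. A slightly more explicit sketch tries to import a list of packages in order and reports which ones fail; it uses only the standard library `importlib`, and the package list is just an example.

```python
import importlib


def check_imports(module_names):
    """Try each import; map name -> None on success, or the error text on failure."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except ImportError as err:
            results[name] = str(err)
    return results


# numpy has to work before scipy can, so check them in that order.
for name, error in check_imports(["numpy", "scipy"]).items():
    print(name, "OK" if error is None else "FAILED: " + error)
```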

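Installing scipy with pip install scipy==0.16.1 [not recommended] completed successfully, but if third-party packages are missing it reports many errors. A summary from searching online: if installing scipy fails after numpy is installed, with the error numpy.distutils.system_info.NotFoundError, some system libraries are usually missing and you need to install libopenblas-dev, liblapack-dev, libatlas-dev and libblas-dev.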
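To see whether those native libraries are visible at all before retrying the scipy build, here is a quick stdlib check with `ctypes.util.find_library`. This is only a sketch: the mapping from the package names above to library names is my assumption, and `find_library` searches differently on Linux, macOS and Windows, so `None` means "not found by this lookup" rather than a definite answer.

```python
from ctypes.util import find_library

# Assumed mapping from the -dev packages above to loader names:
# libopenblas-dev -> "openblas", liblapack-dev -> "lapack", and so on.
NATIVE_LIBS = ["openblas", "lapack", "atlas", "blas"]


def find_native_libs(names=NATIVE_LIBS):
    """Map each library name to what the system lookup reports, or None if missing."""
    return {name: find_library(name) for name in names}


for name, found in find_native_libs().items():
    print(name, "->", found or "NOT FOUND")
```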

Common third-party libraries:

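- pandas, data analysis

- sklearn, machine learning with a wide range of algorithms

- jieba, Chinese word segmentation tool

- gensim, NLP library whose word2vec module trains word-vector models

- scipy, algorithm library and math toolkit

- numpy, numerical computing and data analysis

- matplotlib, plotting and visualization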

Encoding in Python:

In Python 2.X, the encoding flow is: input -> str -> decode -> unicode -> encode -> str -> output.

Python 3.X is different: str is already Unicode text, and encoded data is a separate bytes type.
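A minimal Python 3 sketch of the same flow: `str` plays the role of Python 2's `unicode`, `bytes` plays the role of Python 2's `str`, and `encode`/`decode` convert between them. The `round_trip` helper is just for illustration.

```python
def round_trip(text, encoding="utf-8"):
    """str (Unicode) -> encode -> bytes -> decode -> str."""
    data = text.encode(encoding)      # str -> bytes, done when writing out
    restored = data.decode(encoding)  # bytes -> str, done when reading in
    return data, restored


data, restored = round_trip("中文")
print(type(data).__name__, data)  # bytes b'\xe4\xb8\xad\xe6\x96\x87'
print(restored == "中文")          # True
```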

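If the code contains print u'xx' + yy, where yy is Chinese text, running it directly and printing to the shell works without error. But running it in the background with output redirected to a file raises an error, because Python cannot determine the encoding of the output stream and falls back to ASCII, which cannot encode Chinese characters.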

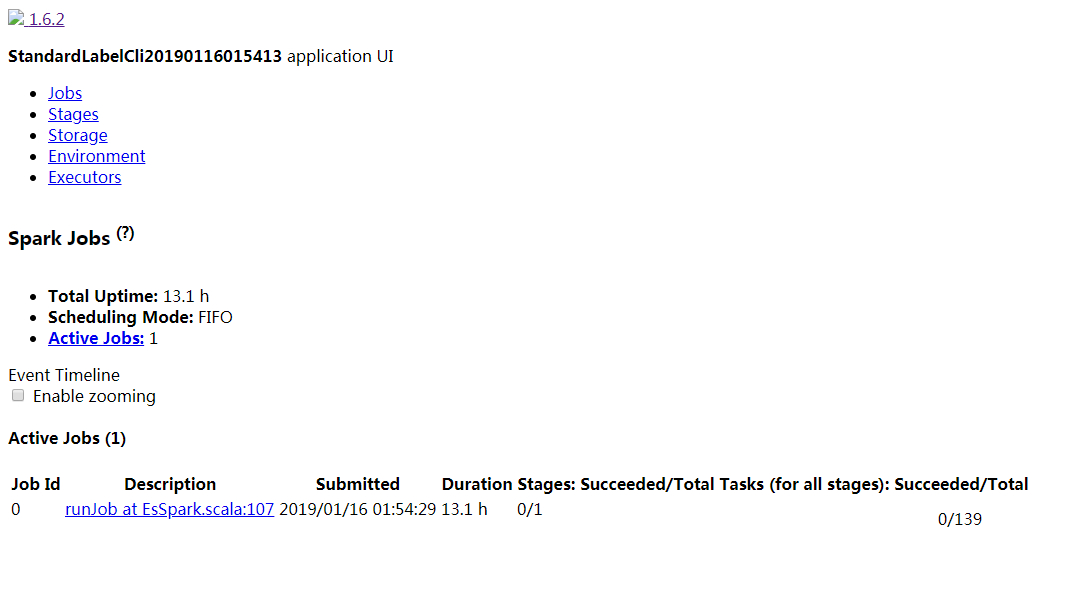

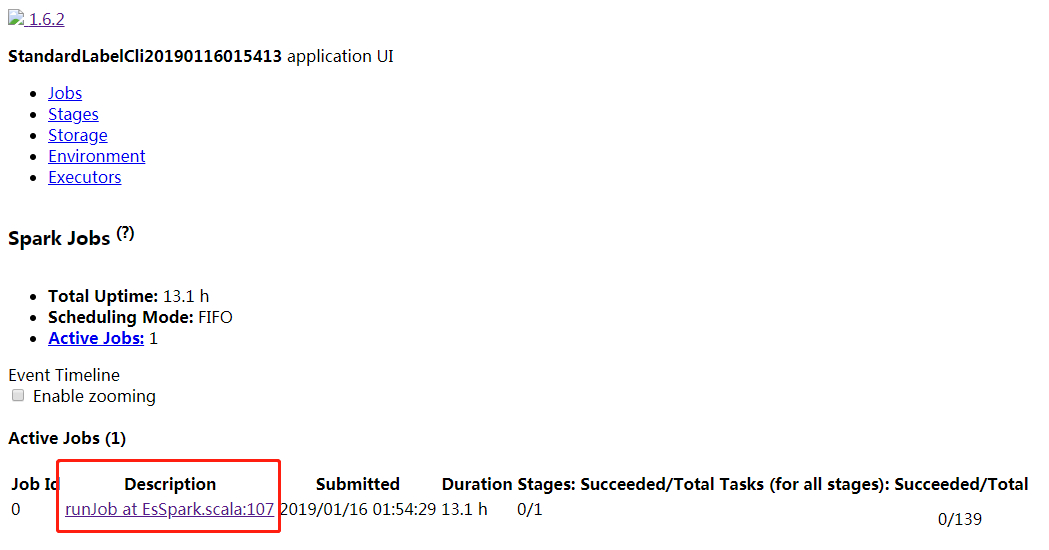

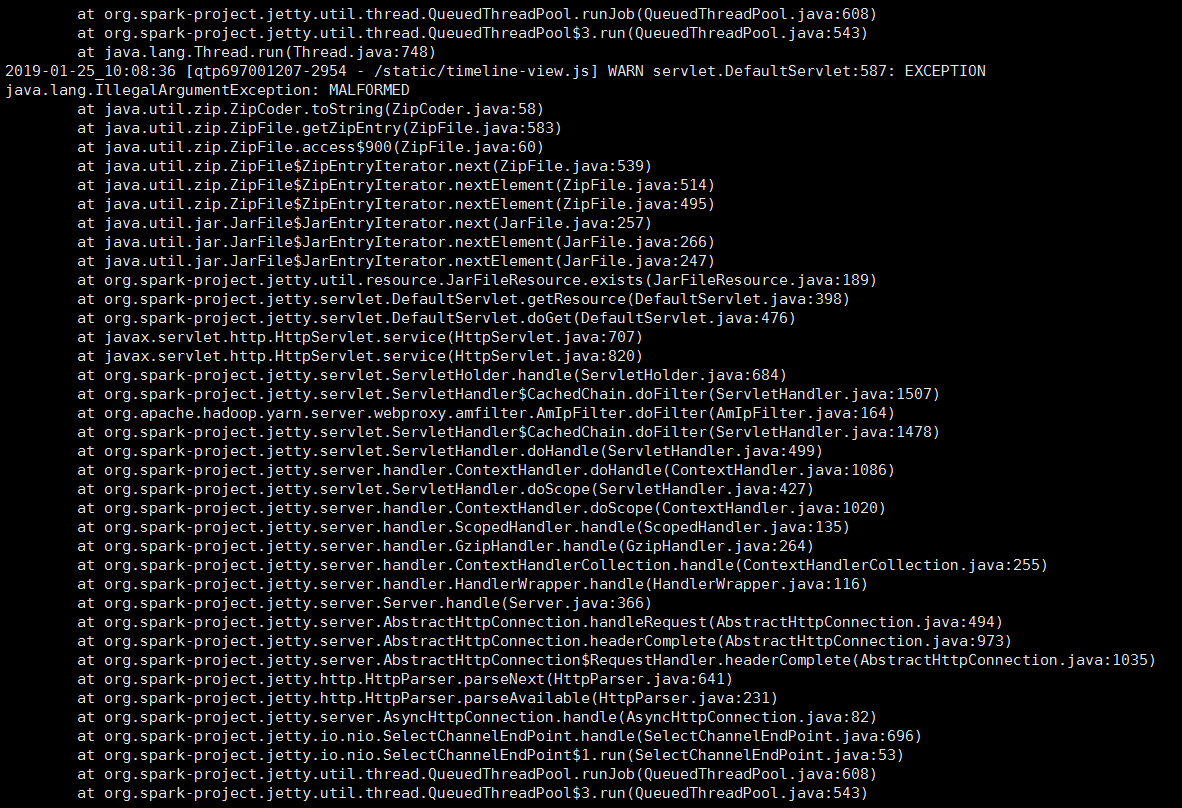

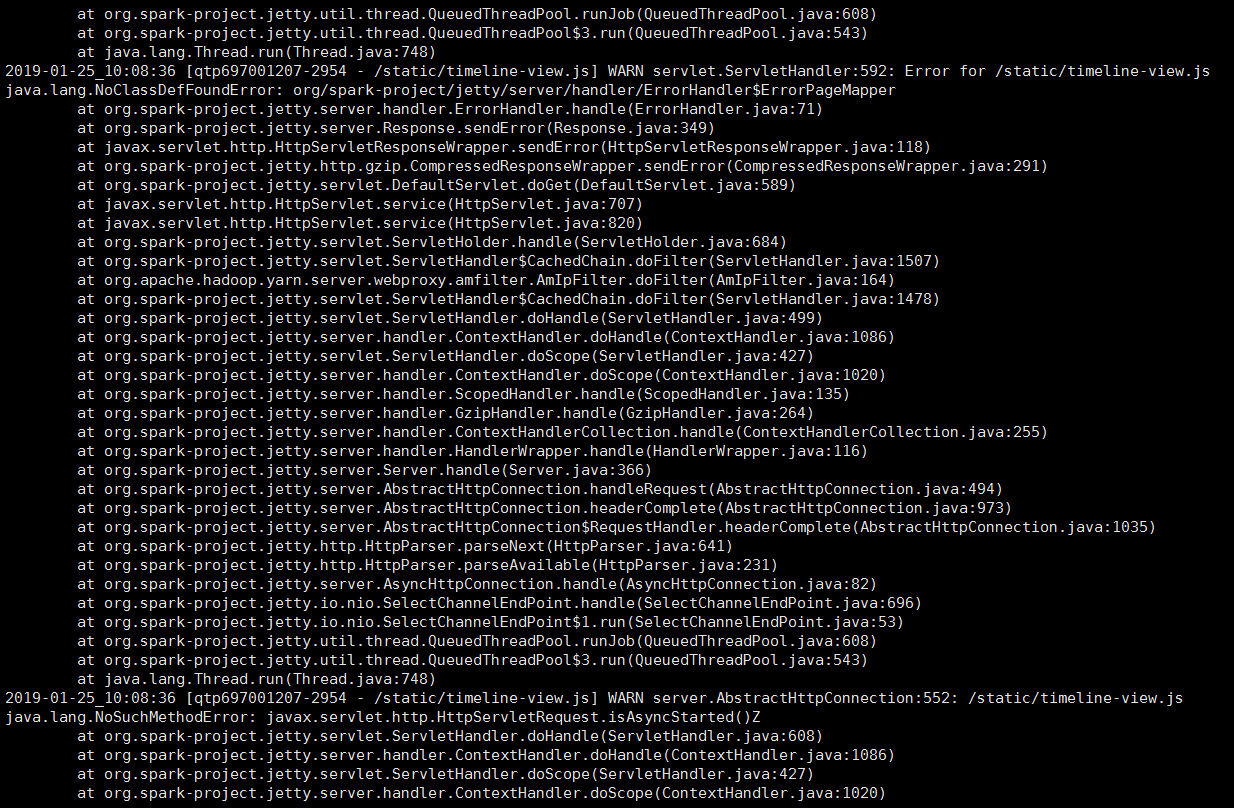

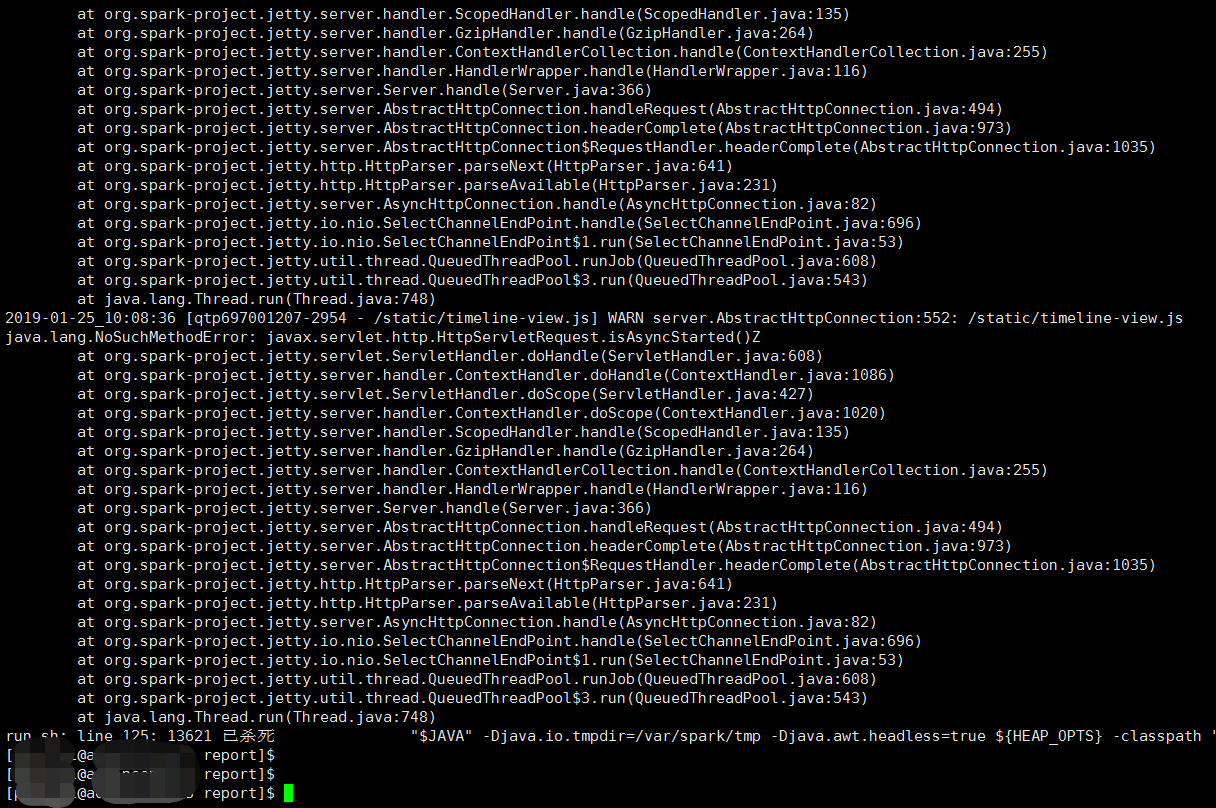
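About the redirect error with print u'xx' + yy: in Python 2, when stdout is redirected to a file, `sys.stdout.encoding` is `None`, so printing a `unicode` object goes through the ASCII codec and fails on Chinese characters. Two common fixes are to start the background job with the environment variable `PYTHONIOENCODING=utf-8`, or to encode the text explicitly before writing. Below is a sketch of the second approach in Python 3 syntax; `write_utf8` is a hypothetical helper.

```python
import io


def write_utf8(text, binary_stream):
    """Encode explicitly instead of relying on the stream's guessed encoding."""
    binary_stream.write(text.encode("utf-8"))


# What goes wrong when the output encoding falls back to ASCII:
try:
    ("xx" + "中文").encode("ascii")
except UnicodeEncodeError as err:
    print("ascii failed:", err.reason)  # ascii failed: ordinal not in range(128)

# An in-memory stand-in for a redirected log file opened in binary mode.
buf = io.BytesIO()
write_utf8("xx" + "中文", buf)
print(buf.getvalue())  # b'xx\xe4\xb8\xad\xe6\x96\x87'

# For the real stdout in Python 3, the binary layer is sys.stdout.buffer:
# write_utf8("xx中文\n", sys.stdout.buffer)
```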

Spark UI cannot be displayed

I started a Spark job in yarn-client mode and saw exception logs on the Driver side:

2019-01-16_14:53:31 [qtp192486017-1829 - /static/timeline-view.js] WARN servlet.DefaultServlet:587: EXCEPTION

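This log kept printing over and over, meaning the error appeared throughout the job's run. What problem did it cause? I checked, and it had no effect on the actual functionality of the Spark job: after the job finished, everything had worked correctly. However, I found that while the job was running, the Spark UI page did not display properly when opened [the beginning of the exception message is about a js file]: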

Clicking into it shows Error 500 directly:

Screenshots of the Driver-side logs on the server:

Log screenshot 1

Log screenshot 2

Log screenshot 3

A few days later I hit the same problem again; apart from these 2 occurrences, I have not seen it at any other time:

2019-01-24_22:51:49 [qtp697001207-1591 - /static/spark-dag-viz.js] WARN servlet.DefaultServlet:587: EXCEPTION

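One more thing worth noting: Chrome refuses to access certain ports. I once had a Spark job whose UI used port 4045 [locked], and its status could not be viewed in Chrome: the page would not open because Chrome blocked it. In that case the problem was not with Spark.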
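One plausible way a Spark UI ends up on 4045: `spark.ui.port` defaults to 4040, and if that port is already taken on the driver host, Spark tries the next port, up to `spark.port.maxRetries` (default 16) more times. So the sixth driver running at the same time on one machine would get 4045. The sketch below models that. The blocked-port set is a partial list from memory (I believe the authoritative list lives in Chromium's `net/base/port_util.cc`), so treat it as an assumption. Setting `spark.ui.port` explicitly to a port outside Chrome's list avoids the problem.

```python
# Assumption: a small subset of ports Chromium refuses to load over HTTP,
# written from memory; check Chromium's restricted-port list for the full set.
CHROME_BLOCKED_SUBSET = {2049, 4045, 6000, 6665, 6666, 6667, 6668, 6669}


def spark_ui_candidate_ports(base_port=4040, max_retries=16):
    """Ports the Spark UI may try, in order, when earlier ones are taken."""
    return [base_port + i for i in range(max_retries + 1)]


def blocked_candidates(ports, blocked=CHROME_BLOCKED_SUBSET):
    """Candidate ports that Chrome (per the subset above) would refuse to open."""
    return [p for p in ports if p in blocked]


ports = spark_ui_candidate_ports()
print(ports[:6])                  # [4040, 4041, 4042, 4043, 4044, 4045]
print(blocked_candidates(ports))  # [4045]
```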

Small Git issues

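1. The local copy is behind and also conflicts with the remote repository, so git pull reports a warning that a merge is needed and cannot update to the latest version directly. The following commands overwrite local files and force an update to the latest version; uncommitted local changes will be lost.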

git fetch --all

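2. One day in September 2018, I found that pushing code with Git always asked for my username and password; even saving them did not help, and every push asked again, which was strange. It turned out my Git version was too old: it was v2.13.0 at the time, and after upgrading to v2.18.0 everything went back to normal. Later I happened to see a notice somewhere saying that the TLS protocol had been upgraded, so older versions were forced to ask for the username and password, and upgrading fixes it.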

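As a side note, HTTPS adds TLS [Transport Layer Security] between TCP and HTTP, providing three main functions: content encryption, authentication and data integrity. TLS's predecessor is SSL [Secure Sockets Layer], which was developed by Netscape and later standardized and renamed by the IETF.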
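To make the TLS note concrete: client and server negotiate a protocol version during the handshake, and when a server stops accepting old versions, a client that cannot offer a newer one fails to connect. Below is a minimal sketch with Python's `ssl` module (3.7+) of a client context that refuses anything older than TLS 1.2. It does not open a connection, and it is not how Git itself configures TLS.

```python
import ssl


def make_client_context(minimum=ssl.TLSVersion.TLSv1_2):
    """Client-side TLS context that verifies certificates and refuses old protocols."""
    ctx = ssl.create_default_context()  # certificate and hostname verification on
    ctx.minimum_version = minimum       # handshakes below this version are rejected
    return ctx


ctx = make_client_context()
print(ssl.OPENSSL_VERSION)
print(ctx.minimum_version, ctx.check_hostname)
```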