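In an ordinary Spark job, the warning Not enough space to cache rdd_0_255 in memory! (computed 8.3 MB so far) appeared, after which the job hung. After a long wait, the Spark job finally failed. The cause turned out to be insufficient memory while caching an RDD: the tasks could not continue processing data and kept waiting for resources to be released, so the job appeared to be frozen. The development environment in this article is Spark v1.6.2.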

The problem

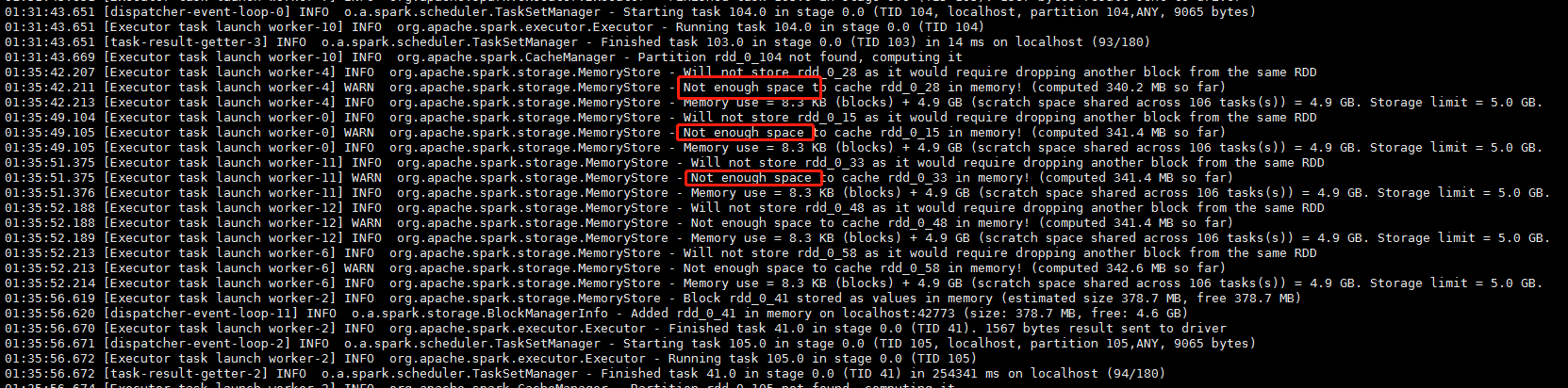

I ran a simple Spark job on the server. Its code calls rdd.cache(), and the log then showed warnings similar to the following:

    01:35:42.207 [Executor task launch worker-4] INFO org.apache.spark.storage.MemoryStore - Will not store rdd_0_28 as it would require dropping another block from the same RDD

At first glance this is only a warning: storage memory is insufficient to complete rdd.cache(). After waiting a while, some of the job's tasks can continue running.

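But the same thing keeps happening afterwards: memory runs out, tasks wait indefinitely, and eventually the job hangs (or, put differently, the Spark job is essentially stuck).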

The log contains one telling hint, Storage limit = 5.0 GB., meaning that the upper limit for storage memory is 5 GB.

Analysis and solution

The business code contains an rdd.cache(); call, which obviously takes up a lot of memory.

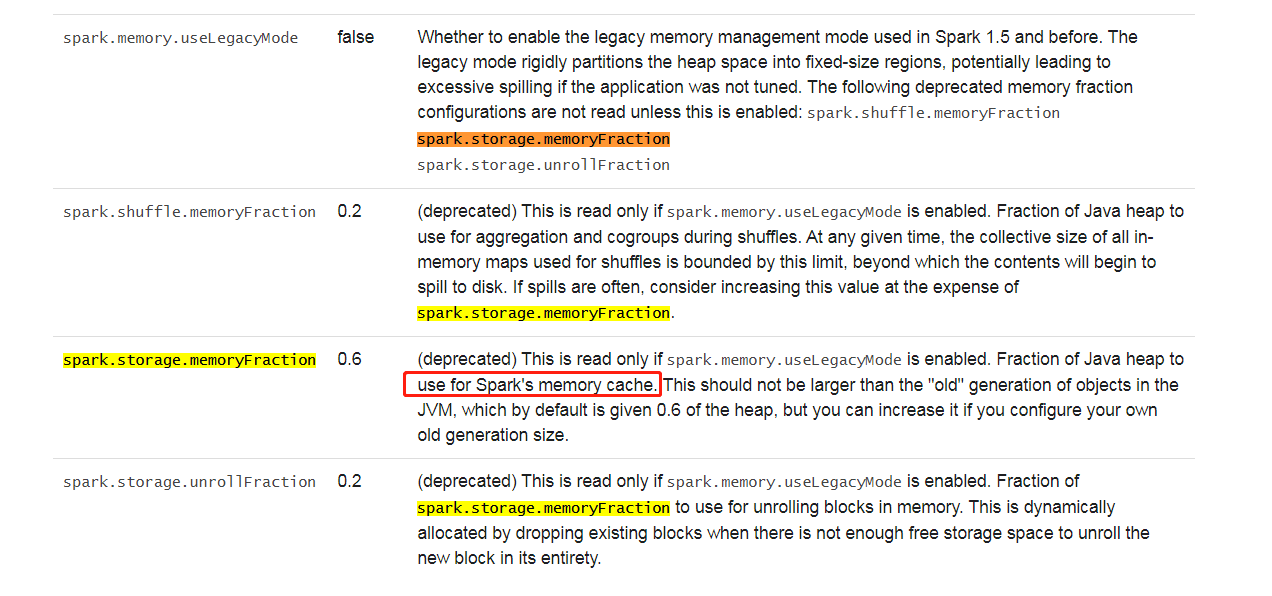

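The official configuration documentation (1.6.2-configuration) lists an important parameter, spark.storage.memoryFraction. It is a fraction that determines the upper limit of the cache size, applied to the executor heap set by spark.executor.memory.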

(deprecated) This is read only if spark.memory.useLegacyMode is enabled. Fraction of Java heap to use for Spark’s memory cache. This should not be larger than the “old” generation of objects in the JVM, which by default is given 0.6 of the heap, but you can increase it if you configure your own old generation size.
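To make the "fraction times heap" relationship concrete, here is a small Java sketch of how the storage limit is derived in legacy mode (spark.memory.useLegacyMode=true). It assumes the Spark 1.6 legacy memory manager, which multiplies the executor's maximum heap by spark.storage.memoryFraction (default 0.6) and by an additional safety factor, spark.storage.safetyFraction (default 0.9). In the default unified memory mode of 1.6 this formula does not apply. The 10 GB heap is a hypothetical value and the class and method names are made up for illustration; the JVM's reported max heap is usually a bit smaller than spark.executor.memory, so treat the result as an estimate.

```java
import java.util.Locale;

public class LegacyStorageLimit {
    // Defaults of Spark 1.6's legacy (static) memory manager.
    static final double DEFAULT_MEMORY_FRACTION = 0.6; // spark.storage.memoryFraction
    static final double DEFAULT_SAFETY_FRACTION = 0.9; // spark.storage.safetyFraction

    // Legacy-mode storage limit: max heap * memoryFraction * safetyFraction.
    static long maxStorageBytes(long heapBytes, double memoryFraction, double safetyFraction) {
        return (long) (heapBytes * memoryFraction * safetyFraction);
    }

    public static void main(String[] args) {
        long gb = 1024L * 1024 * 1024;
        long heap = 10 * gb; // hypothetical heap, roughly spark.executor.memory=10g
        long limit = maxStorageBytes(heap, DEFAULT_MEMORY_FRACTION, DEFAULT_SAFETY_FRACTION);
        // Locale.ROOT keeps the decimal separator a dot regardless of system locale.
        System.out.println(String.format(Locale.ROOT, "Storage limit ~ %.2f GB", (double) limit / gb));
    }
}
```

With the hypothetical 10 GB heap it prints Storage limit ~ 5.40 GB. Raising memoryFraction scales the limit up proportionally, which is the effect of the "bigger cache" option.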

There are also 2 related parameters that readers may want to look into.

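Note that the official documentation marks this parameter as deprecated, so changing it is discouraged. You can still use it if you insist: raising the fraction enlarges the cache space. But this is clearly not a reasonable fix, since the quote above also warns that the cache fraction should not exceed the JVM's old generation.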

Is there another way? Yes, there is.

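The key is to change how the data is cached. rdd.cache() is shorthand for rdd.persist(StorageLevel.MEMORY_ONLY). Instead of calling it directly, either cache the RDD in serialized form with rdd.persist(StorageLevel.MEMORY_ONLY_SER) to reduce the space it takes, or let part of the data be cached on disk with rdd.persist(StorageLevel.MEMORY_AND_DISK) to avoid running out of memory.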
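The difference between these storage levels can be illustrated with a toy model in Java. This is a simplified sketch, not Spark's actual MemoryStore logic. It assumes the partitions of a single RDD are cached one after another and that none of them may be evicted to make room (matching the log message about not dropping another block from the same RDD), and it ignores serialization. Following the Spark documentation, a partition that does not fit is left uncached and recomputed when needed under MEMORY_ONLY, and written to disk under MEMORY_AND_DISK. MEMORY_ONLY_SER would behave like MEMORY_ONLY here, only with smaller partition sizes. The class, enum and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class CachePlacementToy {
    enum Level { MEMORY_ONLY, MEMORY_AND_DISK }
    enum Where { MEMORY, DISK, NOT_CACHED }

    // Toy model: partitions of one RDD arrive in order and none can be evicted,
    // so once the storage limit is reached, the storage level decides the outcome.
    static List<Where> place(long[] partitionBytes, long storageLimitBytes, Level level) {
        List<Where> result = new ArrayList<>();
        long used = 0;
        for (long size : partitionBytes) {
            if (used + size <= storageLimitBytes) {
                used += size;
                result.add(Where.MEMORY);
            } else if (level == Level.MEMORY_AND_DISK) {
                result.add(Where.DISK);       // spilled, read back from disk later
            } else {
                result.add(Where.NOT_CACHED); // recomputed each time it is needed
            }
        }
        return result;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long[] parts = {8 * mb, 8 * mb, 8 * mb, 8 * mb}; // hypothetical partition sizes
        long limit = 20 * mb;                             // hypothetical storage limit
        System.out.println("MEMORY_ONLY     -> " + place(parts, limit, Level.MEMORY_ONLY));
        System.out.println("MEMORY_AND_DISK -> " + place(parts, limit, Level.MEMORY_AND_DISK));
    }
}
```

With four 8 MB partitions and a 20 MB limit, it prints [MEMORY, MEMORY, NOT_CACHED, NOT_CACHED] for MEMORY_ONLY and [MEMORY, MEMORY, DISK, DISK] for MEMORY_AND_DISK.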

Below I demonstrate the latter, i.e. caching part of the data on disk. With this approach the Spark job is, of course, considerably slower.

In my test, the job took about 3 times as long as before (acceptable).

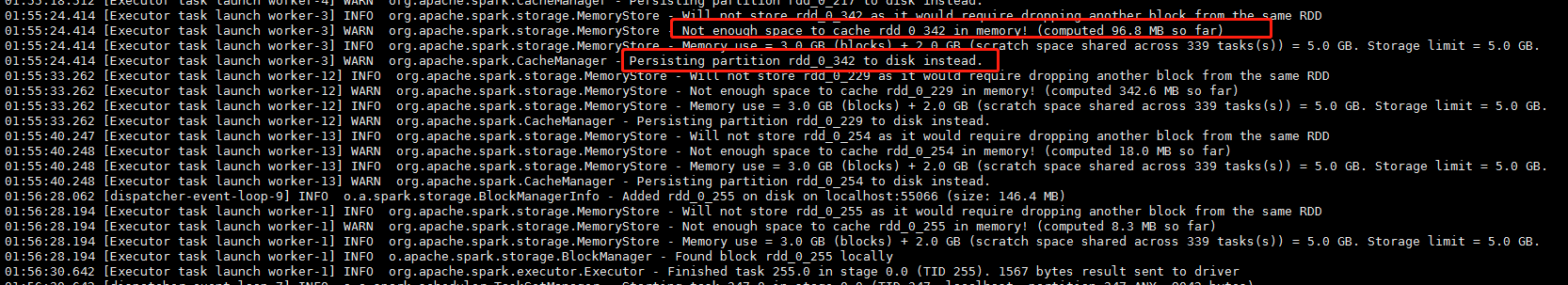

When the Spark job runs again, the log still shows the warning Not enough space to cache rdd in memory!, but it is now followed by a message that the data is being cached on disk: Persisting partition rdd_0_342 to disk instead.

    01:55:24.414 [Executor task launch worker-3] INFO org.apache.spark.storage.MemoryStore - Will not store rdd_0_342 as it would require dropping another block from the same RDD

Notes

In summary, there are three ways to solve this problem:

- Raise the cache fraction spark.storage.memoryFraction (or increase spark.executor.memory; not recommended)
- Cache the RDD data in serialized form: rdd.persist(StorageLevel.MEMORY_ONLY_SER)
- Cache part of the data on disk: rdd.persist(StorageLevel.MEMORY_AND_DISK)